EXTRAscan is a ground-breaking audiovisual projection tool, developed by the team behind EXTRAsync.

EXTRAsync is an interdisciplinary platform that develops innovative projects and devices within audiovisual culture.

Of course, when we found out about this new device, we asked the developers Marco Monfardini, Gianluca Sibaldi and Amelie Duchow for an interview. And here it is!

1. We were truly excited when you first showed us this new audiovisual projection tool you have developed. Can you tell us how you came up with the idea of the EXTRAscan?

Marco: My initial idea dates back to a few years ago, when I wanted to create an instrument that could generate sounds and visuals by using video projection on any given surface.

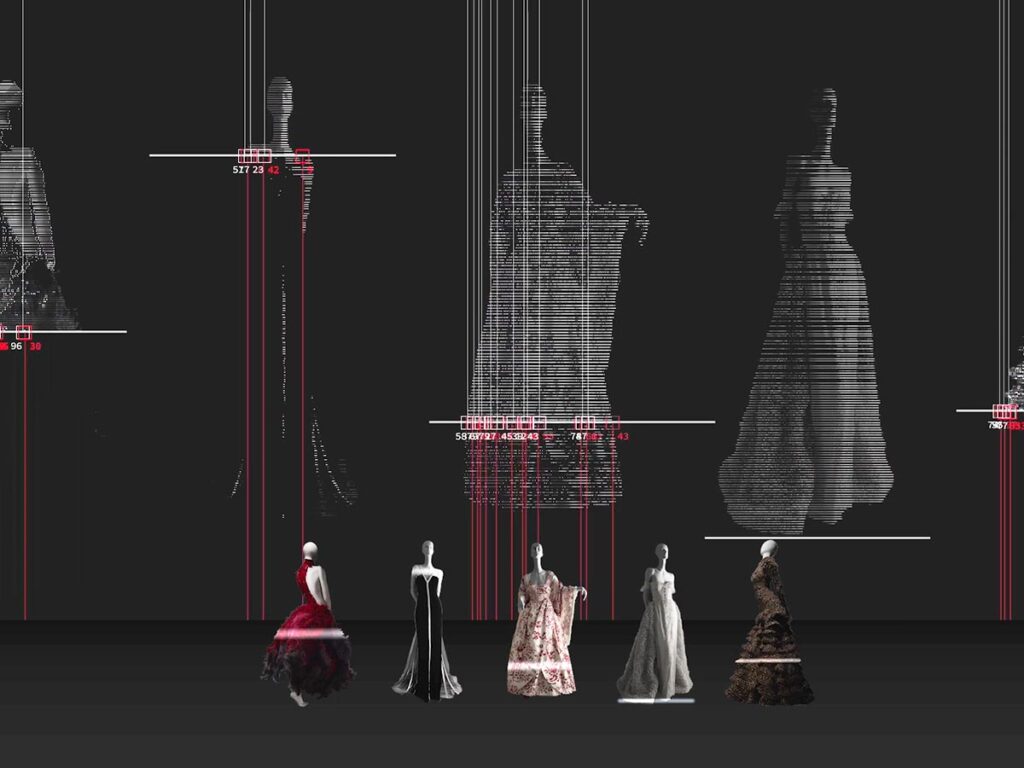

DresScan

I wanted to experiment with a new way of composing both visuals and sound. After a long time of fruitless research I was on the verge of giving up.

However, I told Gianluca about my idea, knowing him as a soundtrack composer and a long-time “computer alchemist”. By combining our two kinds of experience, we began to turn our imagination into reality.

The first steps were exhilarating and pushed us to completely immerse ourselves in creating this new “device”.

2. Technically speaking, how does the audiovisual scan work? What kind of technological set up does it require?

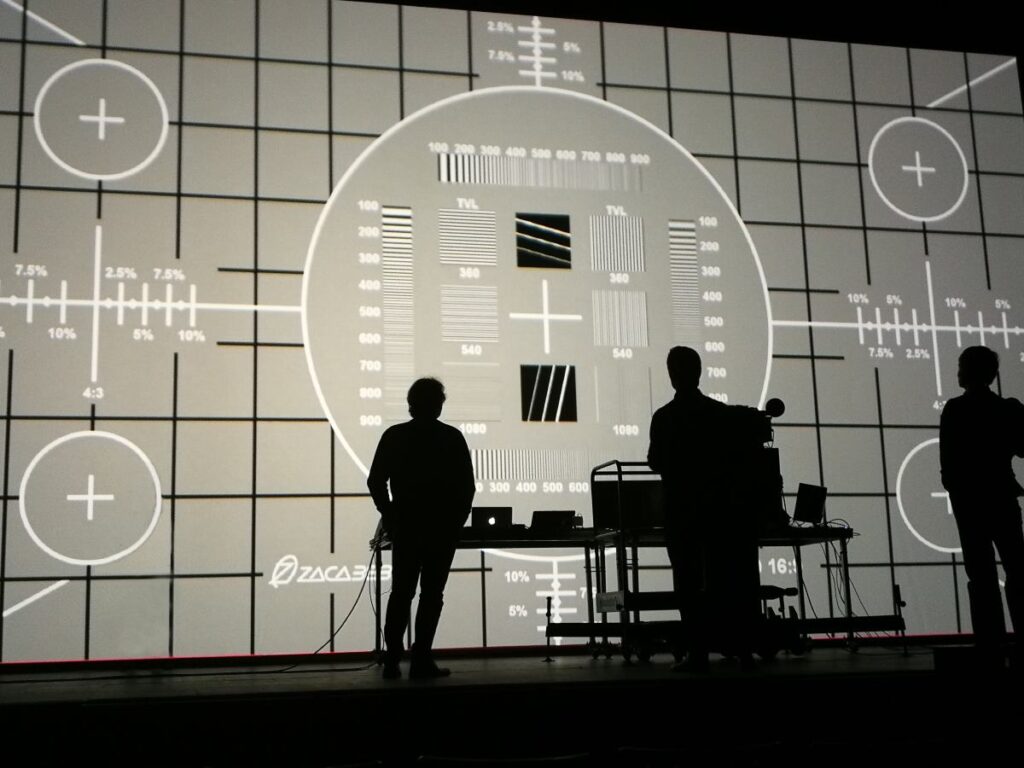

Gianluca: This is a “slow” version of structured light projection, a technique commonly used to perform 3D scans. We project lines onto the scanned object using a video projector. The system then detects shapes and colors through 4 specially modified cameras.

PHOTO CREDITS: Elena de la Puente

The collected data is processed in real time via a network of 5 computers. We use a mix of standard visualization algorithms and others we developed ourselves. Each computer has a specific task and contributes to the final audio and video output.
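As a rough illustration of the general idea of a scan line that "reads" colors and turns them into sound parameters, here is a minimal Python sketch. It is not EXTRAscan's software: the interview does not describe the actual algorithms, so the function names (`scan_column`, `column_to_sound`, `sweep`), the hue-to-pitch and brightness-to-level mapping, and the frequency range are all assumptions made purely for illustration.

```python
import colorsys


def scan_column(image, x):
    """Return the RGB pixels lying under a vertical scan line at column x."""
    return [row[x] for row in image]


def column_to_sound(pixels, low_hz=110.0, high_hz=880.0):
    """Map one scanned column to a (frequency, amplitude) pair.

    Hypothetical mapping: mean hue selects the pitch, mean brightness the level.
    A plain arithmetic mean of hue is a simplification (hue is circular).
    """
    hues, values = [], []
    for r, g, b in pixels:
        h, _s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        hues.append(h)
        values.append(v)
    mean_hue = sum(hues) / len(hues)
    mean_value = sum(values) / len(values)
    frequency = low_hz + mean_hue * (high_hz - low_hz)
    return frequency, mean_value


def sweep(image):
    """Move the scan line left to right and collect one sound event per column."""
    width = len(image[0])
    return [column_to_sound(scan_column(image, x)) for x in range(width)]


if __name__ == "__main__":
    # A tiny 2x3 test "image": a black, a white and a green column.
    image = [
        [(0, 0, 0), (255, 255, 255), (0, 255, 0)],
        [(0, 0, 0), (255, 255, 255), (0, 255, 0)],
    ]
    for x, (freq, amp) in enumerate(sweep(image)):
        print(f"column {x}: {freq:.1f} Hz at level {amp:.2f}")
```

In a real setup the pixel data would come from the cameras observing the projected lines rather than from a hard-coded grid, and the resulting values would drive a synthesizer and a visual renderer.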

3. This cutting-edge tool is designed for live performances. Can you describe how the live manipulation works once you receive the data parameters from the projector scanner?

We designed this tool to be independent, playing sound and generating images based on what it “reads”.

During a live performance we use a basic structure created ad hoc for the hosting venue. We modify the parameters as the performance evolves, while also modifying the structure itself in real time. Both the audio and the video elements undergo continuous processing.

PHOTO CREDITS: Mind the Film

4. In terms of artistic expression, what are the creative possibilities you discovered by utilizing this tool as audiovisual artists?

It is a tool for exploring and reading reality. It extracts features that are usually undetectable through a cross-contamination between visual elements that generate sound and sound elements that have visual correspondences.

This transports us into an additional dimension that itself becomes an object of stimulation and creation.

5. Could you share with us the audiovisual projects you have been working on thanks to this technology, both in the artistic and corporate world?

We have developed several projects with EXTRAscan in collaboration with both corporate and artistic entities.

Porsche recently commissioned a live scan from us for the launch of the new electric Taycan 4S. We presented a performance at the Scuola Holden in Torino in collaboration with Garofalo & Idee Associate.

For the Bonotto Foundation we developed a study for an audiovisual installation. For the LEV Festival 2019 we developed the site-specific live performance SCANAUDIENCE.

6. You showcased the EXTRAscan at LEV Festival 2019 by delivering an audiovisual representation of the audience called SCANAUDIENCE. Can you tell us about this remarkable performance?

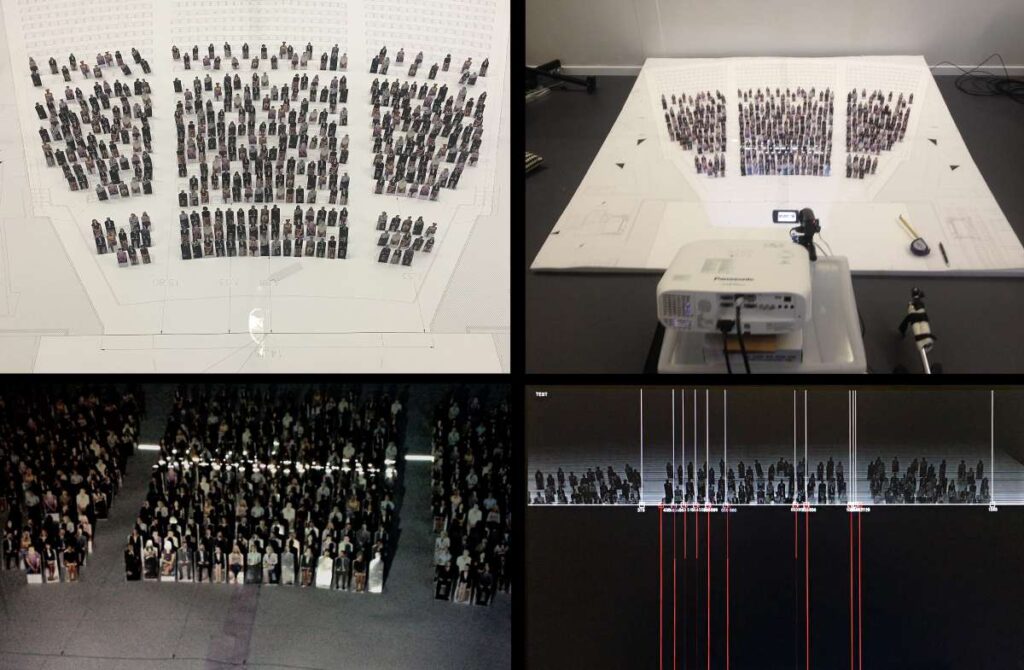

SCANAUDIENCE was born from the study of the human figure and the idea of reversing the roles of a common performance. The audience itself is the protagonist of the performance. They are the subject of the scan and thus directly determine the audio and video result.

In order to develop SCANAUDIENCE at LEV Festival we built a scale model of the Laboral theater. The model included an audience made of small paper dolls that we used to fine-tune the performance.

PHOTO CREDITS: EXTRASync

The technical realization of SCANAUDIENCE is a fairly complex operation. We therefore had to anticipate the characteristics of both the venue and the arrangement of the people in order to optimize timings for the sound check.

The result greatly exceeded our expectations, thanks to a large number of visitors and excellent technical support from the LEV organization.

Before our premiere set at LEV we did not have the opportunity to do a rehearsal with about 1000 people at the same time.

We had a rough idea of the outcome thanks to our scale model, but the real result was far more exciting and surprising for both us and the audience.

SCANAUDIENCE at the Laboral theater revealed a further dimension in addition to the sound generation and video output represented on the screen.

The scan lines projected onto the audience themselves became an additional spectacle inside the main show: an evocative feedback of the action of the “machine”.

7. Can you give us some insights about any further developments you are planning for the EXTRAscan?

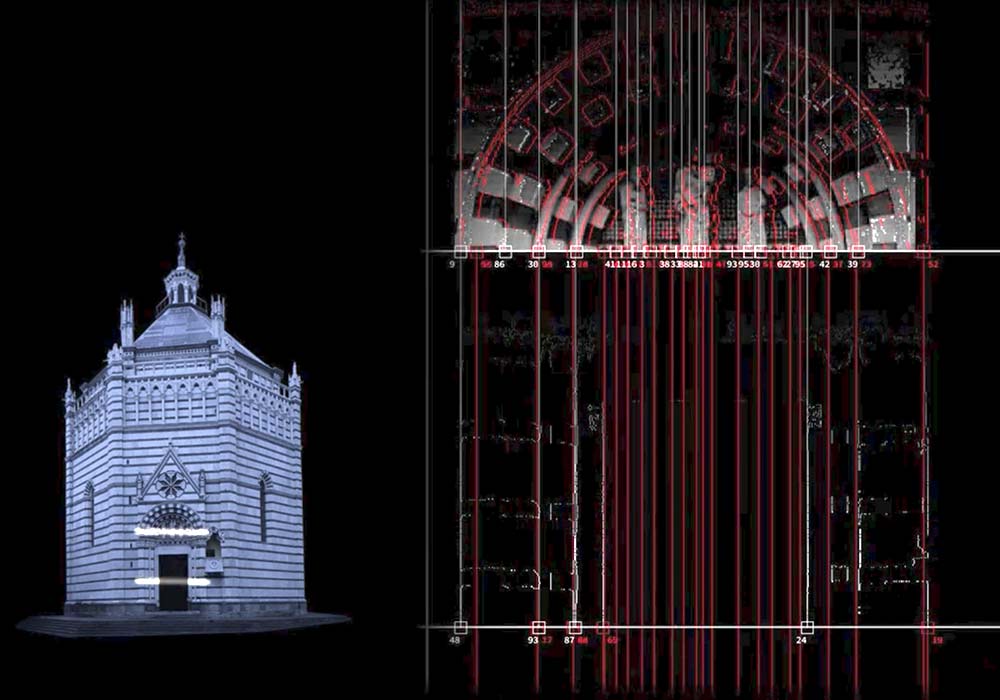

We are currently studying large-scale scanning of buildings, monuments and architectural elements. We have worked on a draft of “innerVoices”. It is a project that “gives voice” to historic buildings and other elements within the context of urban development.

Another area of development is contemporary design. We aim to carry out the scanning of design objects in a more “chamber music” dimension, as if they were generative elements of a small ensemble of voices and instruments.

From a technical standpoint, we are designing new software that is even more advanced and offers a more immediate way of working.

We have recently started the development of new cameras for EXTRAscan. By integrating many functions directly into the camera software, we are automating many delicate calibration operations that previously had to be done manually.